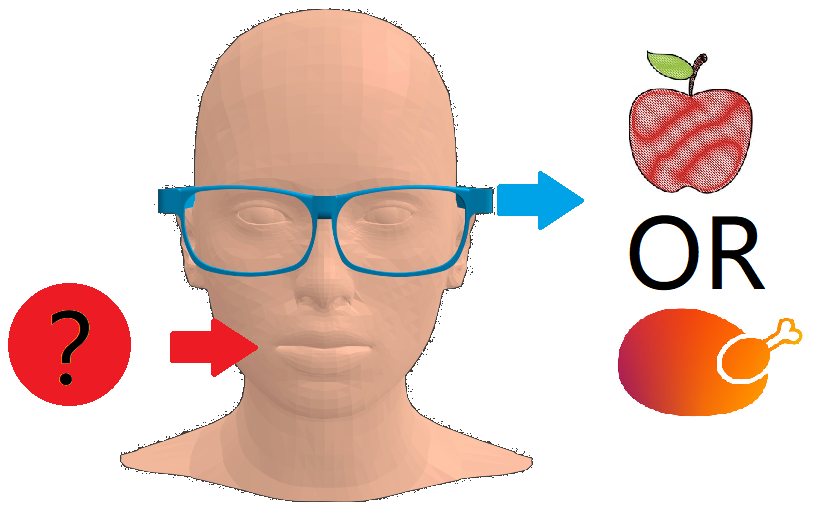

Seminar: Classifying food texture using vibration-sensing eyeglasses

Course/Project description

Chewing produces sound, and the resulting vibration also travels through the head, which is why we can hear, and roughly estimate, what we are eating. In this project, we will establish a procedure for classifying food texture using sensors integrated into eyeglasses (a vibration sensor and a microphone), as a step towards food type classification. You will record eating data in the lab using eyeglasses prototypes and use it to classify food types and textures. Further research questions are open to you, for example: does bite size also relate to the measured vibration?

Learning objectives

- Analyse vibration (audio-like) data.

- Apply machine learning techniques to texture classification (and weight regression).

Course data

| ECTS | 5 |
|---|---|
| Project type | Seminar |
| Presence time | Lecture: 2 SWS; exercises: 3 SWS (SWS = weekly contact hours per semester) |
| Useful knowledge | Audio processing, machine learning, Python |
| Starting date | Winter semester 2018-2019 |

Literature

Up-to-date literature recommendations are provided during the lectures.

Examination

Final presentation and final report.

Contact

Rui Zhang

- Job title: Researcher

- Address: Henkestr. 91, Geb. 7, 91052 Erlangen

- Phone number: +49 9131 85-23604

- Email: rui.rui.zhang@fau.de